Tencent's New AI Video Generator Takes On OpenAI's Sora For Free

Tencent says its model is better than Runway Gen-3, Luma 1.6, and three leading Chinese video generation tools, according to human tests.

While OpenAI keeps teasing Sora after months of delays, Tencent quietly dropped a model that is already showing results comparable to those of existing top-tier video generators.

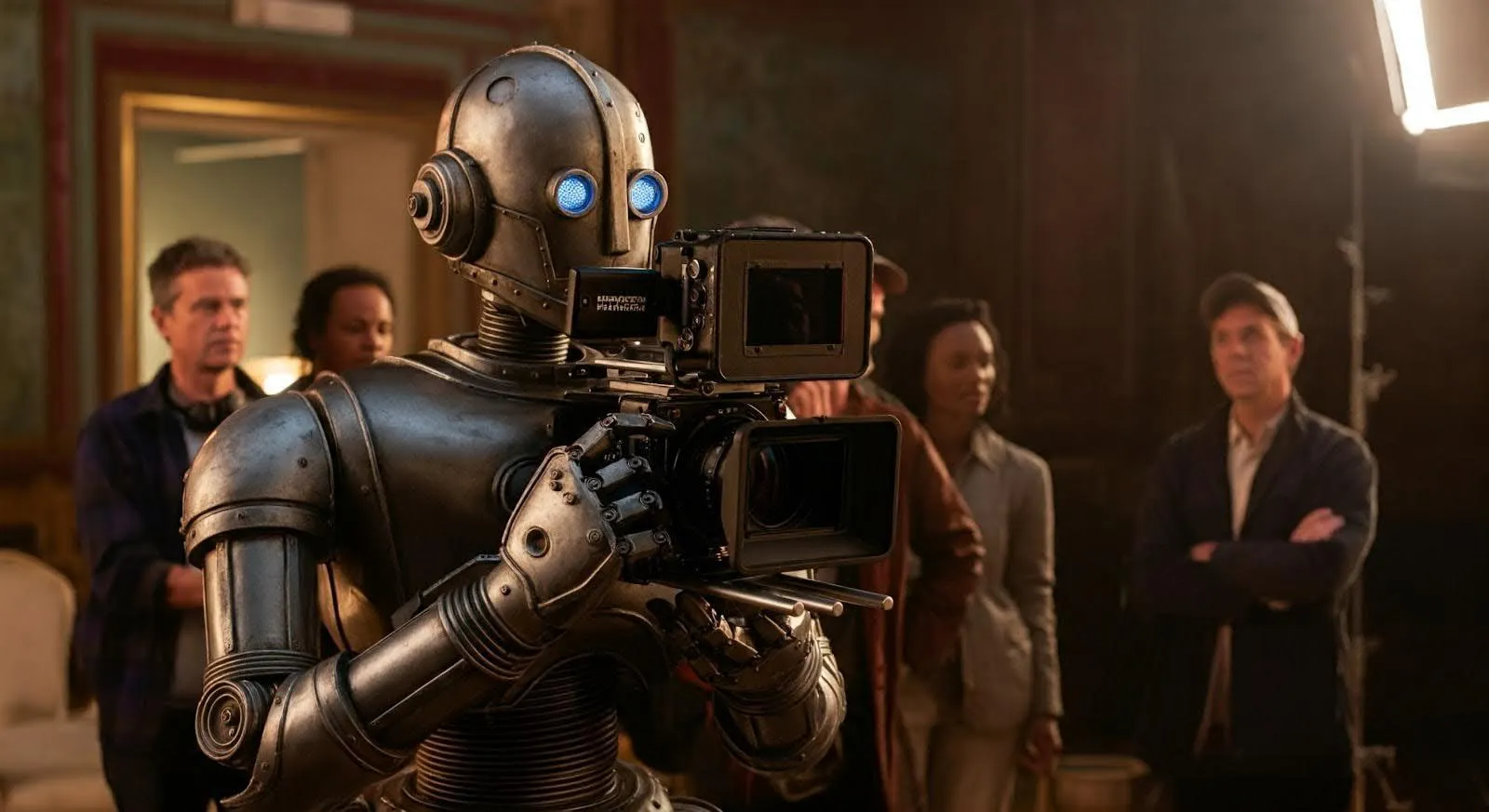

Tencent has unveiled Hunyuan Video, a free and open-source AI video generator. The release is strategically timed to land during OpenAI's 12-day announcement campaign, which is widely expected to include the debut of Sora, its highly anticipated video tool.

“We present Hunyuan Video, a novel open-source video foundation model that exhibits performance in video generation that is comparable to, if not superior to, leading closed-source models,” Tencent said in its official announcement.

The Shenzhen, China-based tech giant claims its model “outperforms” Runway Gen-3, Luma 1.6, and “three top-performing Chinese video generative models,” based on professional human evaluation results.

The timing couldn't be more apt.

Before its video generator, somewhere between the SDXL and Flux eras of open-source image generators, Tencent released an image generator with a similar name.

HunyuanDiT provided excellent results and improved understanding of bilingual text, but it was not widely adopted. The family was completed with a group of large language models.

Hunyuan Video uses a decoder-only Multimodal Large Language Model as its text encoder instead of the usual CLIP and T5-XXL combo found in other AI video tools and image generators.

Tencent says this helps the model follow instructions better, grasp image details more precisely, and learn new tasks on the fly without additional training. Its causal attention setup also gets a boost from a special token refiner that helps it understand prompts more thoroughly than traditional models.

It also rewrites prompts to make them richer and increase the quality of its generations. For example, a prompt that simply says “A man walking his dog” can be enhanced by adding details, scene setup, lighting conditions, quality artifacts, and race, among other elements.
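To make the idea of prompt rewriting concrete, here is a toy sketch in Python. It is not Tencent's method: the rewriter described above is presumably a learned model, while this stand-in simply appends hand-written detail fields to a short prompt. The names `PromptDetails` and `enhance_prompt`, and the example field values, are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class PromptDetails:
    """Hypothetical extra fields a prompt rewriter might fill in."""
    subject_details: str = ""
    scene: str = ""
    lighting: str = ""
    quality: str = ""


def enhance_prompt(prompt: str, details: PromptDetails) -> str:
    """Append every non-empty detail field to the short user prompt.

    Illustrative assumption only: a real rewriter would generate these
    details itself rather than receive them from the caller.
    """
    # Drop surrounding whitespace and a trailing period so the pieces join cleanly.
    parts = [prompt.strip().rstrip(".")]
    for extra in (details.subject_details, details.scene,
                  details.lighting, details.quality):
        if extra:
            parts.append(extra.strip())
    return ", ".join(parts) + "."


if __name__ == "__main__":
    details = PromptDetails(
        scene="on a quiet suburban street",
        lighting="soft golden-hour light",
        quality="high detail, cinematic",
    )
    print(enhance_prompt("A man walking his dog", details))
```

The short prompt stays at the front of the result, so the user's original intent remains the anchor and the added fields only supply context around it.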